Moxin x llama.cpp Customized Quant for Qwen3-Next-80B-A3B-Instruct

We sincerely thank the open-source community developers and contributors, in particular unsloth, for providing the BF16 weights and the imatrix file.

We really appreciate the attention, and we are happy to share additional quantization variants for everyone to try out and experiment with. We hope you enjoy them!

- MXFP4_MOE : 40.73 GiB (4.39 BPW)

- Q8_0 : 78.98 GiB (8.52 BPW)

- Other Quant Versions (TBD)
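As a rough sanity check on the sizes above, bits per weight (BPW) can be read as total file bits divided by the number of weights, with GiB taken as 2^30 bytes. The sketch below, assuming that definition and ignoring GGUF metadata overhead, inverts the relation to estimate the weight count implied by each listed quant (the helper name `implied_params` is hypothetical):

```python
GIB = 2**30  # bytes per GiB (binary gigabyte)


def implied_params(size_gib: float, bpw: float) -> float:
    """Estimate the number of weights from file size (GiB) and bits per weight.

    Assumes BPW = (file size in bits) / (number of weights); metadata
    overhead is ignored, so this is only an approximation.
    """
    return size_gib * GIB * 8 / bpw


# File sizes and BPW values as listed in this model card
quants = {"MXFP4_MOE": (40.73, 4.39), "Q8_0": (78.98, 8.52)}

for name, (size_gib, bpw) in quants.items():
    print(f"{name}: ~{implied_params(size_gib, bpw) / 1e9:.1f}B weights")
```

Both estimates land just under 80B, consistent with the 80B total parameter count in the model name; the 4-bit file is roughly half the size of Q8_0 because it spends roughly half as many bits per weight.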

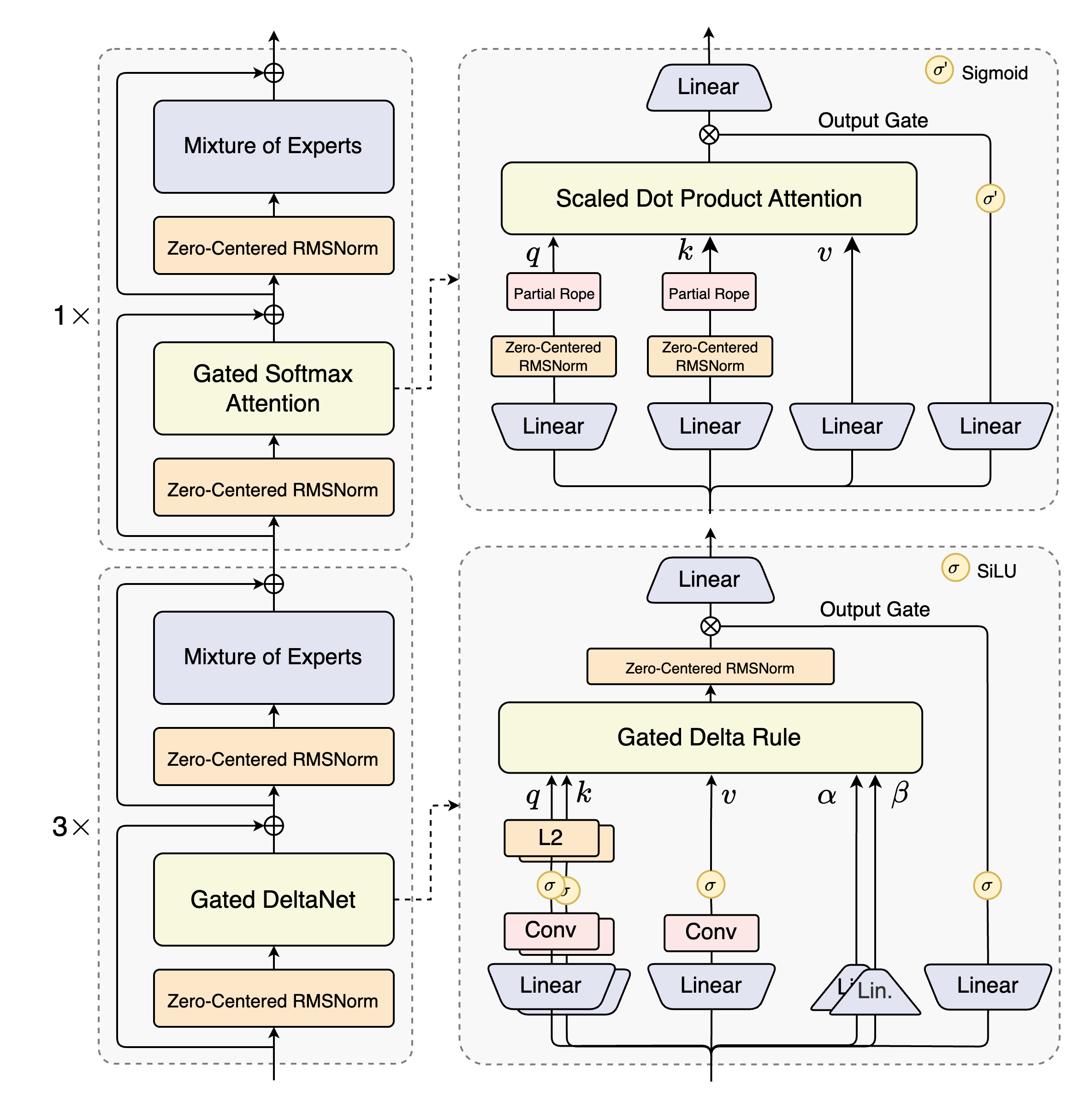

Note: As shown in the figure above, Qwen3-Next features a highly distinctive MoE architecture that differs markedly from existing MoE implementations. Because of this, we are providing only the most reliable and broadly compatible quantization options at this stage.

Download Guide

```shell
huggingface-cli download moxin-org/Qwen3-Next-80B-A3B-Instruct-GGUF --include "*MXFP4_MOE*" --local-dir ./Qwen3-Next-80B-A3B-Instruct-GGUF
```

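```python
# !pip install huggingface_hub hf_transfer
import os

# Optional: uncomment to use hf_transfer for faster downloads
# (set this before importing huggingface_hub)
# os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

from huggingface_hub import snapshot_download

# Download only the MXFP4_MOE files from the repository
snapshot_download(
    repo_id="moxin-org/Qwen3-Next-80B-A3B-Instruct-GGUF",
    local_dir="Qwen3-Next-80B-A3B-Instruct-GGUF",
    allow_patterns=["*MXFP4_MOE*"],
)
```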

Downloading is supported via huggingface_hub (snapshot_download), huggingface-cli, and Xet.
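The llama.cpp commands in the Usage section need the path to the downloaded `.gguf` file. A minimal sketch, assuming the files were saved under the `local_dir` used above (the helper `find_gguf` is hypothetical and not part of huggingface_hub), that searches that directory recursively for matching GGUF files:

```python
from pathlib import Path


def find_gguf(local_dir: str, pattern: str = "*MXFP4_MOE*.gguf") -> list[Path]:
    """Return GGUF files under local_dir whose names match pattern, sorted.

    Hypothetical helper: returns an empty list if local_dir does not exist
    or nothing matches.
    """
    root = Path(local_dir)
    if not root.is_dir():
        return []
    # rglob searches all subdirectories, e.g. a per-quant MXFP4_MOE/ folder
    return sorted(root.rglob(pattern))
```

For example, `find_gguf("Qwen3-Next-80B-A3B-Instruct-GGUF")` lists the MXFP4_MOE files, and passing `pattern="*Q8_0*.gguf"` would look for the Q8_0 variant instead.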

Usage

Example of running the GGUF with a local build of llama.cpp (llama-cli or llama-server).

Build llama.cpp locally

```shell
git clone https://github.com/ggml-org/llama.cpp.git
cd llama.cpp

# If configuration fails because libcurl is not found, add -DLLAMA_CURL=OFF
cmake -B build -DGGML_CUDA=ON -DBUILD_SHARED_LIBS=OFF
cmake --build build --config Release -j --clean-first
```

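```shell
# -ngl 99 offloads all layers to the GPU
# Alternative --ctx-size values: 4096, 8192 (smaller contexts use less memory)
build/bin/llama-cli -m Qwen3-Next-80B-A3B-Instruct-GGUF/MXFP4_MOE/Qwen3-Next-80B-A3B-Instruct-MXFP4_MOE.gguf \
    -ngl 99 \
    --temp 0.7 \
    --top-k 20 \
    --top-p 0.8 \
    --min-p 0.00 \
    --presence-penalty 1.0 \
    --ctx-size 16384
```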
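llama-server exposes an OpenAI-compatible chat endpoint, so the same model can be queried over HTTP. A minimal sketch using only the Python standard library, assuming the server was started with this GGUF on its default address (for example `build/bin/llama-server -m Qwen3-Next-80B-A3B-Instruct-GGUF/MXFP4_MOE/Qwen3-Next-80B-A3B-Instruct-MXFP4_MOE.gguf -ngl 99 --ctx-size 16384`, listening on `http://127.0.0.1:8080`). The helper names `build_chat_request` and `chat` are hypothetical, and the sampling values simply mirror the llama-cli example; `top_k` and `min_p` are llama.cpp-specific extensions rather than standard OpenAI fields.

```python
import json
import urllib.request

# Assumed default llama-server address; adjust if you pass --host/--port.
SERVER_URL = "http://127.0.0.1:8080/v1/chat/completions"


def build_chat_request(prompt: str, max_tokens: int = 512) -> dict:
    """Build a chat request body mirroring the llama-cli sampling settings."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
        "top_p": 0.8,
        "top_k": 20,  # llama.cpp extension, not a standard OpenAI field
        "min_p": 0.0,  # llama.cpp extension
        "presence_penalty": 1.0,
        "max_tokens": max_tokens,
    }


def chat(prompt: str, url: str = SERVER_URL, timeout: float = 300.0) -> str:
    """POST the request to a running llama-server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    # OpenAI-style response: first choice holds the assistant message
    return payload["choices"][0]["message"]["content"]
```

With the server running, `chat("Summarize mixture-of-experts in one sentence.")` returns the model's reply as a string. Building the request body separately keeps the sampling settings in one place and easy to inspect.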

Citation

If this work is helpful, please cite:

```bibtex
@article{chen2025collaborative,
  title={Collaborative Compression for Large-Scale MoE Deployment on Edge},
  author={Chen, Yixiao and Xie, Yanyue and Yang, Ruining and Jiang, Wei and Wang, Wei and He, Yong and Chen, Yue and Zhao, Pu and Wang, Yanzhi},
  journal={arXiv preprint arXiv:2509.25689},
  year={2025}
}
```

Acknowledgements

This repository builds upon the outstanding work of the following open-source authors and projects:

- Qwen/Qwen3-Next-80B-A3B-Instruct

- ggml-org/llama.cpp, unsloth.ai, bartowski.

- ikawrakow/ik_llama.cpp, ikawrakow, ubergarm.

We sincerely thank them for their excellent contributions to the open-source community.


Base model: Qwen/Qwen3-Next-80B-A3B-Instruct